If you have been following Power & Grace’s Instagram or this blog, you will have noticed that we have started providing more content on how our coaches use athlete data to aid decision making. As a weightlifting coach, perhaps you are planning to acquire something cost-efficient and simple like a jump mat, or maybe you already use one or more instruments (e.g., jump mat, bar velocity units, force plates) to monitor your athletes. I may be biased, but I believe doing so is an excellent decision! Before getting elbow deep in athlete data, however, I want to discuss why it is imperative to design a standard operating procedure (SOP) before collecting data. An SOP provides higher-quality data, which leads to better analyses and ultimately gives you, the coach, better insight into your athletes’ physiology. For this example, I will consider implementing a jump mat and then delve into Power & Grace’s jump mat SOP and why it matters.

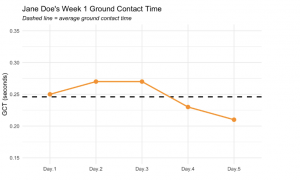

Picture this: the jump mat you ordered has just arrived, and you are excited to turn that bad boy on and throw some athletes onto it. Maybe you saw Angela talk about drop vertical jump (DVJ) testing over at Power & Grace HQ and plan to have your athletes perform the DVJ, so you begin testing your athletes every training session! After a week, you look at the DVJ performance fluctuations for one of your athletes, Jane Doe:

We can see that Jane Doe has ground contact time (GCT) data points above and below her average for this metric (for the sake of simplicity: lower GCT = better; higher GCT = worse). You eyeball this data visual and think, “wow, Jane Doe’s GCT was higher than average on Days 2 and 3.” Well, not so fast! A key component of data collection has been overlooked: measurement error. The data points collected during testing are not the true scores but rather observed scores, described by the equation

$$\text{Observed score} = \text{True score} + \varepsilon$$

where ε is the measurement error surrounding the true score (1,2). Every test carries inherent measurement error, so the observed score a test provides can never be interpreted as the hypothetical true score. Measurement error comes from two sources: instrumentation noise and biological noise. Keeping things brief, instrumentation noise is error caused by the testing instrument itself, and biological noise is error caused by biological processes (e.g., sleep, nutrition, mood) (1,2). When monitoring athlete data, it is important to account for measurement error and to be able to view and interpret the uncertainty around each data point. Calculating and using measurement error is a discussion for another time, so let us return to why an SOP is important.
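To make the observed-score equation concrete, here is a minimal Python sketch (not part of the Power & Grace workflow) that simulates repeated observed GCT scores for one athlete whose true score never changes. The true GCT of 0.200 s and both noise standard deviations are hypothetical numbers chosen for illustration. The sketch assumes the two noise sources are independent and normally distributed.

```python
import random
import statistics


def simulate_observed(true_score, instrument_sd, biological_sd, n, seed=0):
    """Simulate n observed scores as true score + instrument noise + biological noise.

    Assumes both noise sources are independent and normally distributed,
    which is an illustrative assumption, not a property of any specific device.
    """
    rng = random.Random(seed)
    return [
        true_score + rng.gauss(0, instrument_sd) + rng.gauss(0, biological_sd)
        for _ in range(n)
    ]


true_gct = 0.200  # hypothetical true GCT (s) that never changes
instrument_sd = 0.005  # hypothetical instrumentation noise SD (s)
biological_sd = 0.010  # hypothetical biological noise SD (s)

observed = simulate_observed(true_gct, instrument_sd, biological_sd, n=10_000)

# Independent error sources add in variance, so the total error SD is their root-sum-square.
expected_sd = (instrument_sd**2 + biological_sd**2) ** 0.5

print(f"mean observed GCT: {statistics.mean(observed):.4f} s")
print(f"SD of observed GCT: {statistics.stdev(observed):.4f} s (expected about {expected_sd:.4f} s)")
print("first five observed scores:", [round(x, 3) for x in observed[:5]])
```

Even though the simulated athlete's true GCT never changes, the observed scores land above and below it from session to session, so one higher-than-average day is not, by itself, evidence that anything changed. Lowering either noise SD narrows the spread of observed scores, which is what an SOP aims to do.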

The necessity of an SOP stems from the desire to minimize measurement error. Given the previous formula, a lower measurement error means the observed score collected is closer to the hypothetical true score. Let us consider a summary of the Power & Grace jump mat SOP:

- Pre-Collection
  - Athletes always perform DVJ testing before the start of their afternoon training session (or before the single session on Saturdays).
  - The athlete performs the standard warm-up up until they start moving a barbell.
- Drop Jump Collection
  - Standardized box height
    - We use a 15 cm box.
  - Standardized instructions
    - “Drop off the box and jump as high as you can as quickly as you can.”
  - The athlete performs 3 maximal-effort DVJ trials.
    - Hands on hips
    - The first trial is always discarded (more on that later).
  - Never “coach up” the athlete on technical changes that may maximize their jump for the given testing session.
    - Instruction should be provided, however, if the test is being performed incorrectly.
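One way to keep every session consistent with the SOP is to store its fixed parameters alongside the data you log. The Python sketch below is a hypothetical illustration, not Power & Grace's actual tooling. The `DVJProtocol` class and the `trials_to_log` function are names invented here, and the GCT values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DVJProtocol:
    """Hypothetical encoding of the fixed parameters in the DVJ SOP."""
    box_height_cm: int = 15
    hands_on_hips: bool = True
    trials_performed: int = 3
    trials_discarded: int = 1  # the first trial is performed but never logged


def trials_to_log(protocol: DVJProtocol, trial_values: list[float]) -> list[float]:
    """Return only the trials to store, dropping the first `trials_discarded`."""
    if len(trial_values) != protocol.trials_performed:
        raise ValueError(
            f"expected {protocol.trials_performed} trials, got {len(trial_values)}"
        )
    return trial_values[protocol.trials_discarded:]


sop = DVJProtocol()
gct_trials = [0.231, 0.212, 0.208]  # hypothetical GCTs (s) from one session
print(trials_to_log(sop, gct_trials))  # [0.212, 0.208]
```

Checking the trial count before logging means a session that deviates from the SOP fails loudly instead of quietly entering the database.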

The goal of this SOP is to minimize the instrumentation and biological noise, thereby reducing measurement error and providing us with a more precise observed score. Let us look at an example of how the SOP has done this.

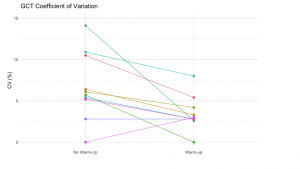

Part of the Power & Grace jump mat DVJ SOP is that the athlete performs three maximal-effort trials, with the first trial discarded. Why? I analyzed the coefficient of variation (CV) of the DVJ metrics for 10 athletes, both when the first trial was discarded and when it was used. Briefly, CV is calculated by dividing the standard deviation of a set of values by their mean, which provides a unitless measure of data dispersion. This is useful here for measuring the dispersion of each athlete’s DVJ trials within a session. We would expect two trials in the same session to be nearly identical, since no physiological changes should occur between them. Between-trial variability can therefore be considered an indication of measurement error, because it is unlikely to result from physiological alterations. Three DVJ trials were performed according to the SOP, and for each athlete, CVs were calculated for Trials 1 and 2 (“No Warm-up”) and for Trials 2 and 3 (“Warm-up”).
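The CV comparison described above can be sketched in Python as follows. This is an illustrative reconstruction, not the analysis code behind the figure. It assumes the sample standard deviation (n − 1 denominator) and expresses CV as a percentage, and the athletes and GCT values are hypothetical.

```python
import statistics


def cv_percent(values):
    """Coefficient of variation (%): sample SD divided by the mean, times 100."""
    return statistics.stdev(values) / statistics.mean(values) * 100


def warmup_comparison(trials):
    """CVs for Trials 1-2 ("No Warm-up") and Trials 2-3 ("Warm-up")."""
    if len(trials) != 3:
        raise ValueError("expected exactly three DVJ trials")
    return {
        "No Warm-up": cv_percent(trials[0:2]),
        "Warm-up": cv_percent(trials[1:3]),
    }


# Hypothetical GCTs (s) from three DVJ trials in one session for two athletes.
session = {
    "Athlete A": [0.240, 0.215, 0.212],
    "Athlete B": [0.198, 0.205, 0.203],
}

results = {athlete: warmup_comparison(trials) for athlete, trials in session.items()}
for athlete, cvs in results.items():
    print(athlete, {condition: round(cv, 1) for condition, cv in cvs.items()})

# Average CV per condition across athletes, the kind of summary a group figure might show.
for condition in ("No Warm-up", "Warm-up"):
    mean_cv = statistics.mean(cvs[condition] for cvs in results.values())
    print(f"mean {condition} CV: {mean_cv:.1f}%")
```

With only two trials per CV, using the population SD instead would shrink every CV by the same factor of √2, so that choice does not change which condition looks less variable.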

Examining this figure of CVs for GCT, it appears likely that performing three trials and using only the latter two minimizes between-trial variability, a potential indication of decreased measurement error. Why? Having the athlete perform a maximal-effort DVJ before the final two trials that we log in our database possibly prepares the athlete’s body to perform a maximal DVJ. This would be an example of the SOP lowering measurement error by minimizing biological noise!

We could continue to dive further into this topic; however, this blog post is already extensive, so I will wrap it up. Hopefully, this has helped explain why an SOP is essential. Note that, for the specific example of a jump mat, I have found our data to be rather noisy, which is even more reason to find ways to minimize measurement error. Soon, I will discuss how you can harness measurement error to express uncertainty around data points (like the ones seen in the first figure), providing a range of plausible test scores. To learn more on this topic, I recommend reading section 1, Establishing Plausible Baseline Values (True Scores), of the Swinton et al. (2018) paper cited below.

Jake Slaton

1. Swinton, P, Hemingway, BS, Gallagher, I, and Dolan, E. Statistical methods to reduce the effects of measurement error in sport and exercise: A guide for practitioners and applied researchers. SportRxiv [preprint], 2023. Available from: https://sportrxiv.org/index.php/server/preprint/view/247

2. Swinton, PA, Hemingway, BS, Saunders, B, Gualano, B, and Dolan, E. A statistical framework to interpret individual response to intervention: paving the way for personalized nutrition and exercise prescription. Front Nutr 5: 41, 2018.